WEB SCRAPING, THE POWER OF ANALYSIS

Digital transformation is gaining ground in companies. The pandemic and social distancing are practically forcing every business to have at least one web page, and they are also encouraging the growth of web-based entrepreneurs. Add to this the constant growth of information on the web (social networks, online encyclopedias, news, etc.), and the result is a huge amount of information, most of it free to use. With this in mind, how can we collect that information and put it to appropriate use? The answer is web scraping.

What does web scraping consist of?

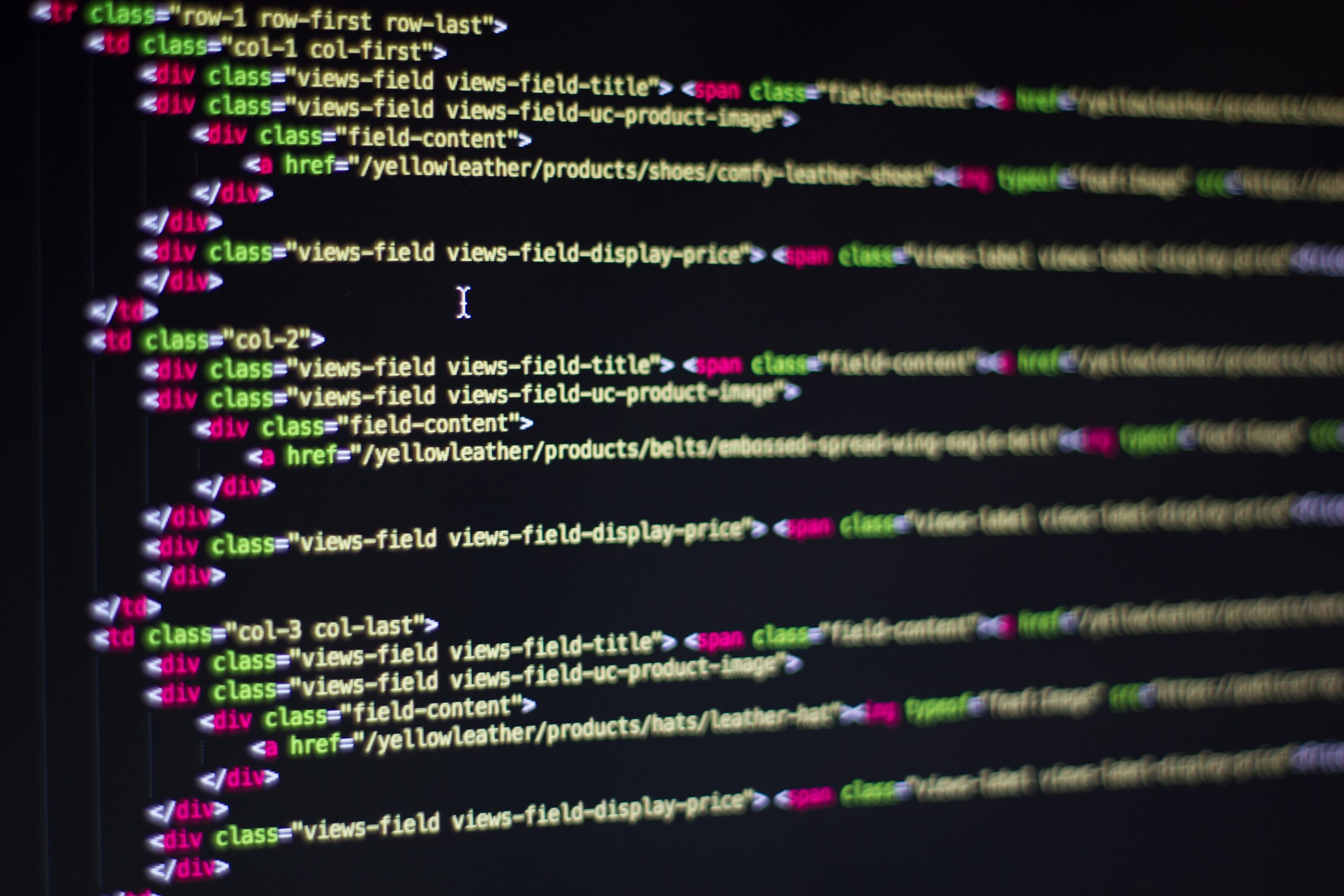

Basically, it consists of writing a script that moves through the target page as if it were a person, using the page's HTML to find its way around and extract information for further processing. Let's take Twitter's main page as an example. When we open it for the first time, we see something like this:

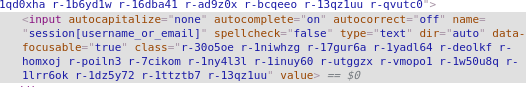

If we inspect the structure of this page with the browser's element inspector (Ctrl + Shift + I) and click on the text fields, we get the following:

At first glance this can look complex, but all we need to know is that each text field is an input element with a defined name. These names are the identifiers the script uses to "point" to each element and type in the credentials just as a normal user would. With this, it is possible to log in to the page, and the same analysis applies to any other page we want to scrape.
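To make the idea of "pointing" to an input by its name more concrete, here is a minimal Python sketch that uses only the standard-library html.parser module. It assumes a hypothetical, simplified login form: the field names username and password are illustrative and do not reproduce Twitter's real markup. The sketch only locates the named fields in static HTML; actually typing credentials into a live page requires a browser automation tool such as Puppeteer.

```python
from html.parser import HTMLParser

# Hypothetical, simplified login form: the field names are illustrative only
# and do not reproduce Twitter's actual markup.
LOGIN_HTML = """
<form action="/login" method="post">
  <input type="text" name="username" placeholder="Phone, email or username">
  <input type="password" name="password" placeholder="Password">
  <input type="submit" value="Log in">
</form>
"""


class InputCollector(HTMLParser):
    """Records the attributes of every <input> element that has a name."""

    def __init__(self):
        super().__init__()
        self.inputs = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        if name:  # the name is the identifier the script "points" to
            self.inputs[name] = attributes


def find_inputs(html):
    """Return a dict mapping each input's name to its attributes."""
    parser = InputCollector()
    parser.feed(html)
    parser.close()
    return parser.inputs


if __name__ == "__main__":
    fields = find_inputs(LOGIN_HTML)
    for name, attributes in fields.items():
        print(name, attributes.get("type"))
```

Here the unnamed submit button is skipped, so only the username and password fields are reported; those names are what a browser automation script would target when filling in the form.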

What is this information extraction for?

In a world where people are more connected than ever, information is very valuable, and given the variety of web pages, this process has many applications:

- Job search: it is straightforward to build tools that extract job-offer information from sites such as LinkedIn or Twitter and then put it into a spreadsheet for later analysis.

- Market analysis: extracting information about a range of products, such as their prices or features, to try to gain a competitive advantage in the market. Similarly, scraping can gather information about potential customers in order to steer them toward a service or product.

- Pattern-based training: the information available on social networks can serve several purposes, such as predicting people's behavior, processing the data with natural language processing (NLP) techniques to detect bots from the grammar they use, training a virtual assistant on the vocabulary used on social networks, or finding out what people think about a service.
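The job-search case in the list above boils down to "extract, then tabulate". As a minimal sketch, assuming a hypothetical listing page where each offer is an li element with class="job" and its fields are span elements with the classes title, company and location (real job boards use their own, frequently changing, markup), the following Python code collects each offer and writes the result as CSV, a format any spreadsheet program can open:

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical listing markup; real job boards use different structures and class names.
LISTING_HTML = """
<ul>
  <li class="job"><span class="title">Backend Developer</span>
      <span class="company">Acme</span><span class="location">Remote</span></li>
  <li class="job"><span class="title">Data Analyst</span>
      <span class="company">Globex</span><span class="location">Bogota</span></li>
</ul>
"""

FIELDS = ("title", "company", "location")


class JobParser(HTMLParser):
    """Builds one dict per <li class="job">, filled from its classed <span>s."""

    def __init__(self):
        super().__init__()
        self.jobs = []
        self._current_field = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "job" in classes:
            self.jobs.append({})  # start a new job offer
        elif tag == "span" and self.jobs:
            # Remember which field this span holds (None if it is not one we track).
            self._current_field = next((c for c in classes if c in FIELDS), None)

    def handle_data(self, data):
        if self._current_field:
            job = self.jobs[-1]
            job[self._current_field] = job.get(self._current_field, "") + data

    def handle_endtag(self, tag):
        if tag == "span" and self._current_field:
            job = self.jobs[-1]
            job[self._current_field] = job[self._current_field].strip()
            self._current_field = None


def jobs_to_csv(html):
    """Extract the job offers from the HTML and return them as CSV text."""
    parser = JobParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(parser.jobs)
    return out.getvalue()


if __name__ == "__main__":
    print(jobs_to_csv(LISTING_HTML))
```

To save a real spreadsheet file instead of a string, open it with open("jobs.csv", "w", newline="") and pass that file object to csv.DictWriter.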

These are just some examples; it all depends on how you use it. In upcoming posts, we will dig deeper into this methodology, using Puppeteer as the main tool, with practical examples of its uses.

Posted by

Juan Rambal – Backend Developer